
If Computer Art was the artistic outcome of several interlinked art movements, it also built on very recent developments in computer technology, both in the United States and in Western Europe. The generation of artists who used analogue computers, such as Ben Laposky and John Whitney, is closely linked with those who worked with the early digital machines, for instance Charles Csuri and A. Michael Noll. However, while analogue computers evolved in the late 1960s into a range of video synthesisers and TV graphics machines, digital computers were deployed for Computer-Aided Design and other drafting applications.

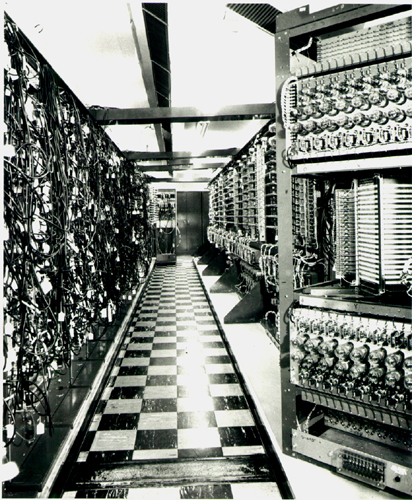

This in turn stemmed from the digital computer’s origins in military defence systems, going back to MIT’s U.S. Navy-funded Whirlwind system in the late 1940s.

MIT Whirlwind c.1949

As related by Norman H. Taylor, who worked on the Whirlwind from 1948, the very first display was a representation of the machine’s storage tubes, the primitive form of memory used at the time. By running through each tube in turn, the program showed which ones were working and which were not. The programmers then wanted a way of pointing to the faulty tubes, so Bob Everett invented a “light gun” that could be held over points on the screen to identify the relevant tube. Taylor believed this was the very first “man machine interactive control of the display”; the light gun could also place or erase light spots on the screen, and in 1948 it was used to write the letters “MIT” on the display by direct interaction.

This graphical display immediately attracted the attention of the MIT public relations department and news organisations; as Taylor presciently noted, “it was clear that displays attracted potential users; computer code did not.”[1]

More graphical programs followed, including the Bouncing Ball animation and a primitive “computer game” in which the ball could be made to fall through a hole in the “floor” by turning frequency knobs that controlled its bounce.

In 1949 or 1950, a student named Dom Combelec used the system to design the placement of antennae, generating their distribution patterns. The Whirlwind’s impact was considerable: so many visitors came to see it in the early 1950s (over 25,000) that the computing group had to set up a department to handle them. They were mainly attracted by the displays and the novel means of interacting with the computer. As Taylor put it:

It was a little dull when you just put in numbers and got out numbers. But when we got the display started, it changed the whole thing, and I think that meant not only the bandwidth you can get out of a display system, but the man machine interaction of the light pen seemed to excite people beyond comprehension.[2]

From the Whirlwind, which had 5,000 vacuum tubes and occupied around a quarter of an acre, the Lincoln Laboratory developed the SAGE air defence system, which used points of light to track radar traces of aircraft flying over the US coastline (Taylor, slide 13). The system’s terminals consisted of a circular screen, operated using a light gun and rows of switches. These were in service from 1956 until 1978, with no fewer than 82 consoles connected to the central computer.

The Whirlwind was succeeded by the TX-0, which used transistors and featured a more advanced display. It also had a wider range of programs, and a refined version of the light gun: the smaller, lighter light pen, developed by Ben Gurley, who later worked on the DEC PDP-1. Jack Gilmore described how simulating a scientific workstation with a grid of symbols led to a primitive drawing program, in which lines and shapes were moved around with the light pen.[3]

TX-0 console

Soon, Gilmore and his team realised that, as well as characters, “we could literally produce pieces of drawings and then put them together […] we actually developed a fairly primitive drawing system.”[4] Having already devised a way of cutting and pasting text around the screen, they wrote a “tracking routine” so that the light pen could move these text strings.[5]

The first scanner was invented at the National Bureau of Standards in 1957 and attached to their SEAC computer. The ability to input images from outside the computer would transform the nature of computer graphics; however, this first machine was designed to scan text:

It occurred to me that a general-purpose computer could be used to simulate the many character recognition logics that were being proposed for construction in hardware. A further important advantage of […] such a device was that it would enable programs to be written to simulate the […] ways in which humans view the visible world.[6]

In a move that would have serious and lasting consequences for digital images, the SEAC group decided to represent the scans as binary images, divided into picture elements, or pixels, of uniform size. The reasons were mainly to do with hardware limitations; as is often the case, standards established early on to suit the available hardware later restricted more advanced developments:

Several decisions made in our construction of the first scanner have been enshrined in engineering practice ever since. Perhaps the most insidious was the decision to use square pixels. Simple engineering considerations as well as the limited memory capacity of SEAC dictated that the scanner represent images as rectangular arrays […] No attempt was made to predicate the digitization protocol on the nature of the image. Every image was made to fit the Procrustean requirement of the scanner.[7]

The SEAC scanner c.1957
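The SEAC decision can be illustrated with a short sketch. The Python below is hypothetical and makes no attempt to reproduce SEAC's programs: it samples a continuous brightness function at the centre of each cell of a uniform grid and thresholds every sample to 0 or 1, so that any image, whatever its content, is forced into the same rectangular array. The grid size, the 0.5 threshold and the disc-shaped test image are assumptions chosen for the example.

```python
def digitise(image, rows, cols, threshold=0.5):
    """Sample image(x, y), a brightness function over the unit square,
    at the centre of each cell of a rows x cols grid of equal cells,
    and threshold each sample to 0 (dark) or 1 (bright)."""
    raster = []
    for r in range(rows):
        y = (r + 0.5) / rows
        row = []
        for c in range(cols):
            x = (c + 0.5) / cols
            row.append(1 if image(x, y) >= threshold else 0)
        raster.append(row)
    return raster


def disc(x, y):
    """Hypothetical source image: a bright disc on a dark background."""
    return 1.0 if (x - 0.5) ** 2 + (y - 0.5) ** 2 <= 0.3 ** 2 else 0.0


for row in digitise(disc, 8, 8):
    print("".join("#" if v else "." for v in row))
```

The sampling grid is fixed in advance and ignores what the image contains, which is the "Procrustean" quality Kirsch describes; the cells are square only when a square image is divided into equal numbers of rows and columns.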

So as early as 1957 the basic format of the digitised image was in place, even though most computer images of this period were vector graphics. Indeed, the impetus for vector-based displays came largely from Computer-Aided Design, since industrial CAD was one of the computer’s first graphical applications.

The following year saw another significant development: the use of computer graphics for what would become the most distinctive form of computer entertainment, the computer game. Although the 1962 game “Spacewar” on the DEC PDP-1 is generally held to be the first of its kind, it was preceded by “Tennis for Two”, devised in 1958 by the physicist Willy Higinbotham at Brookhaven National Laboratory, an atomic research station on Long Island. As part of a science education campaign to reassure local residents about the laboratory’s research, Higinbotham hit upon the idea of a simple tennis game, computed on an analogue computer and displayed on an oscilloscope.

The circuitry simulated the effects of gravity and force as a ball travelled back and forth over a net; even wind drag was included. The game was highly successful, with people queuing for two hours to play it, yet it had only a localised impact and was withdrawn when the science exhibit was revised in the early 1960s.[8] It testifies to how quickly the engineers who built these machines grasped their potential; significantly, the game predates the first interactive Computer Art by at least six years.

Tennis for Two
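The behaviour described for Tennis for Two can be approximated digitally. Higinbotham's game computed its trajectories with analogue circuitry, so the Python below is only a numerical analogue under assumed parameters: gravity, a linear drag coefficient, a bounce restitution factor and the time step are all hypothetical values, and no net or player controls are modelled. Each step updates the velocity from gravity and drag, then moves the ball, reversing and damping the vertical velocity whenever it reaches the ground.

```python
def simulate_ball(x, y, vx, vy, gravity=9.81, drag=0.1, restitution=0.8,
                  dt=0.01, steps=500):
    """Trace a ball's flight under gravity and linear air drag, bouncing
    off the ground at y = 0. Uses semi-implicit (symplectic) Euler steps:
    velocities are updated first, then positions using the new velocities."""
    path = [(x, y)]
    for _ in range(steps):
        # Drag opposes motion in proportion to speed; gravity acts downwards.
        vx += -drag * vx * dt
        vy += (-gravity - drag * vy) * dt
        x += vx * dt
        y += vy * dt
        if y < 0.0:
            # Bounce: clamp to the ground and reverse the vertical velocity,
            # losing a fraction of it on each impact.
            y = 0.0
            vy = -vy * restitution
        path.append((x, y))
    return path


# A hypothetical shot struck from 1 m up, moving 4 m/s forward and 2 m/s up.
trajectory = simulate_ball(0.0, 1.0, 4.0, 2.0)
peak = max(y for _, y in trajectory)
print(f"peak height {peak:.2f} m, final x {trajectory[-1][0]:.2f} m")
```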

Lawrence G. Roberts, meanwhile, was working on scanning photographs with the TX-0’s successor, the TX-2. As part of this work, he wanted to extract the three-dimensional information contained in these images and turn it into true 3D graphics. This meant finding ways of representing 3D objects, drawing on a range of theories about how humans perceive in three dimensions. In the course of this work he developed a “hidden line” display capability, which suppresses any edges of a 3D object that would be invisible to the viewer.
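Roberts's hidden-line method handled scenes of several polyhedra; the sketch below covers only its simplest case and is an illustration under stated assumptions, not his algorithm. For a single convex solid, a face is visible when its outward normal points towards the viewer, and an edge is visible when at least one of its two adjacent faces is. The cube data, the viewpoint and the function names are hypothetical, and occlusion of one object by another is not handled.

```python
# Unit cube: vertex coordinates, and faces listed counter-clockwise when
# seen from outside, so the cross product below yields an outward normal.
VERTS = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),
         (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1)]
FACES = [(0, 3, 2, 1), (4, 5, 6, 7), (0, 1, 5, 4),
         (2, 3, 7, 6), (1, 2, 6, 5), (0, 4, 7, 3)]


def sub(a, b):
    return tuple(p - q for p, q in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def faces_viewer(face, eye):
    """True if the face's outward normal points towards the eye."""
    a, b, c = (VERTS[i] for i in face[:3])
    normal = cross(sub(b, a), sub(c, a))
    return dot(normal, sub(eye, a)) > 0


def classify_edges(eye):
    """Split the solid's edges into visible and hidden sets. For a single
    convex solid, an edge is visible if either adjacent face faces the eye."""
    front = [faces_viewer(f, eye) for f in FACES]
    edge_faces = {}
    for i, face in enumerate(FACES):
        for j in range(len(face)):
            edge = tuple(sorted((face[j], face[(j + 1) % len(face)])))
            edge_faces.setdefault(edge, []).append(i)
    visible = {e for e, fs in edge_faces.items() if any(front[i] for i in fs)}
    hidden = set(edge_faces) - visible
    return visible, hidden


visible, hidden = classify_edges(eye=(2.5, 3.0, 4.0))
print(len(visible), "visible edges; hidden:", sorted(hidden))
```

From this viewpoint the three edges meeting at the far corner (0, 0, 0) are suppressed, leaving nine visible.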

Roberts also needed a way of displaying objects in perspective, which meant integrating perspective geometry with the matrices that described the coordinates of the object’s component points. As Roberts recalled, he studied 19th-century perspective geometry to see how objects were drawn in perspective, and then introduced matrices so that the construction could be represented on a computer. His main point was that contemporary mathematicians had little interest in, or even knowledge of, the area: he had to return to the earlier texts to discover how it was done. The integration of the two fields was his own achievement, and out of it came the field of “computational geometry”.[9]

By combining the two, Roberts created the “four-dimensional homogeneous coordinate transform”, which remains the basis of perspective transformations on the computer.[10] The images shown here are some of the earliest examples, and even with the primitive hardware of the period Roberts was able to rotate them in almost real time. This was the beginning of true three-dimensional imagery on the computer. As Binkley puts it:

Computational algorithms for picturing do not require placement in any real setting; indeed, if one wants to depict an actual object, the first step is to abstract its shape from the real world of a selected coordinate space [note that 3D programs often start with Platonic solids and make “real” objects by building with them or deforming them]. The object must be described using numbers to fix its characteristics (XO, YO, ZO). The picture plane is similarly determined with points or an equation (z = ZP), and the point of view simply becomes an ordered triple (XV, YV, ZV).[11]
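Binkley's ingredients map directly onto a homogeneous-coordinate formulation. The Python below is a minimal sketch under stated assumptions: it uses the column-vector convention of many modern textbooks (Roberts's own notation may differ), places the point of view at the origin looking along +z, and takes the picture plane to be z = d. A point (x, y, z) is written as (x, y, z, 1); rotation and translation are ordinary 4x4 matrices, and the perspective matrix sets the fourth coordinate w to z/d, so dividing through by w gives the projected point (d·x/z, d·y/z). The helper names are illustrative, not taken from any library.

```python
import math


def matmul(a, b):
    """Multiply two 4x4 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def transform(m, point):
    """Apply a 4x4 matrix to a 3D point in homogeneous form (x, y, z, 1),
    then divide by w to return to ordinary 3D coordinates."""
    h = (*point, 1.0)
    x, y, z, w = (sum(m[i][k] * h[k] for k in range(4)) for i in range(4))
    return (x / w, y / w, z / w)


def rotation_y(theta):
    """Rotation by theta radians about the y axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, 0, s, 0], [0, 1, 0, 0], [-s, 0, c, 0], [0, 0, 0, 1]]


def translation(tx, ty, tz):
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]


def perspective(d):
    """Central projection from the origin onto the picture plane z = d:
    the bottom row sets w = z / d, so dividing by w yields (d*x/z, d*y/z, d)."""
    return [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 1 / d, 0]]


# Rotate a cube, push it 5 units in front of the viewer, then project it.
view = matmul(perspective(1.0),
              matmul(translation(0, 0, 5), rotation_y(math.radians(30))))
cube = [(x, y, z) for x in (-1, 1) for y in (-1, 1) for z in (-1, 1)]
for corner in cube:
    px, py, _ = transform(view, corner)
    print(f"{corner} -> ({px:.3f}, {py:.3f})")
```

Because every step is a 4x4 matrix, a whole chain of rotations, translations and the final projection can be multiplied into one matrix and applied to each vertex in turn; this composability is one reason the homogeneous approach suits interactive display.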

Because the computer’s three-dimensional space derives directly from projective geometry, as outlined by Roberts, its heritage lies not only in pictorial space but also in the plans and diagrams used to model components and buildings in physical reality. In other words, there has to be a direct correspondence between points in computer space and points in physical space, so that physical objects can be manufactured accurately. This has led to a close reciprocity between the physical and the digital; yet one of the major uses of the computer’s modelling abilities has been to create “realistic” images of fantastic creatures and fictional settings. It is an interesting paradox that systems intended to simulate realistic appearances have more often been employed for fictional and imaginative purposes, though this would seem to be inherent in the notion of simulation.

Also at this time, Steven Coons was developing algorithms for describing surfaces through parametric methods. He also considered how best to produce “a system that would […] join man and machine in an intimate cooperative complex” – a CAD system.[12] Coons described a setup not unlike the SAGE terminals, with an operator working on a CRT screen with a light pen.
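Coons's parametric approach builds a surface from its boundary curves. The Python below sketches the bilinearly blended form later widely known as the Coons patch; it is a textbook formulation assumed here for illustration, not a reproduction of Coons's own programs. Two ruled surfaces, one blending the boundaries along u and one blending the boundaries along v, are added together, and the bilinear corner term, which both include, is subtracted once. The boundary curves in the example are hypothetical.

```python
def coons_patch(c0, c1, d0, d1):
    """Return S(u, v) for a bilinearly blended Coons patch.

    c0(u), c1(u): boundary curves along v = 0 and v = 1
    d0(v), d1(v): boundary curves along u = 0 and u = 1
    All four map [0, 1] to 3D points and must meet at the corners."""
    p00, p10, p01, p11 = c0(0.0), c0(1.0), c1(0.0), c1(1.0)

    def surface(u, v):
        a0, a1, b0, b1 = c0(u), c1(u), d0(v), d1(v)
        point = []
        for k in range(3):
            # Linear blend between the two boundaries running along u...
            ruled_u = (1 - v) * a0[k] + v * a1[k]
            # ...plus a blend between the two boundaries running along v...
            ruled_v = (1 - u) * b0[k] + u * b1[k]
            # ...minus the bilinear corner term, which both blends contain.
            corners = ((1 - u) * (1 - v) * p00[k] + u * (1 - v) * p10[k]
                       + (1 - u) * v * p01[k] + u * v * p11[k])
            point.append(ruled_u + ruled_v - corners)
        return tuple(point)

    return surface


# Three straight edges and one arched edge (a parabola along u = 1).
patch = coons_patch(
    c0=lambda u: (u, 0.0, 0.0),
    c1=lambda u: (u, 1.0, 0.0),
    d0=lambda v: (0.0, v, 0.0),
    d1=lambda v: (1.0, v, v * (1 - v)),
)
print(patch(0.5, 0.5))  # prints (0.5, 0.5, 0.125)
```

The patch reproduces all four boundary curves exactly and fills the interior smoothly, so a designer need only specify the edges.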

[Plate VIII: Ed Zajec, Satellite Trajectory, 1960/1]

Here is a starting point for direct interaction with the computer: blocks of graphics dragged into place with a light pen to build larger diagrams, on a CRT display that showed every action in real time. Its primitive nature should be stressed: in no way was the output of this machine considered “art”, nor can it be seen as such in retrospect. But it was pioneering, and it helped pave the way directly for Ivan Sutherland’s seminal Sketchpad program of the early 1960s.

Ivan Sutherland demonstrates the Sketchpad

Sketchpad was produced on the TX-0’s successor, the TX-2, which was much more graphics-oriented than its predecessor. Gilmore recalled the TX-2’s use for simulating planetary motion under gravity, so that the orbits of the planets around the sun, and of the moons around the planets, could be observed. Their velocity and acceleration could be controlled with the light pen, another real-time application.[13]

This was an early exercise in computer animation and simulation, and in some ways it pointed towards interactive video games, as did Spacewar, written for the DEC PDP-1 in 1961-62. The TX-2 was certainly capable of sophisticated graphics and interactive manipulation. Gilmore also used the DEC PDP-1 as the basis of an Electronic Drafting Machine (EDM) for the ITEK Corporation.

Developed by Norman Taylor, Gilmore and others between 1960 and 1962, the PDP-1-based ITEK EDM was intended for use in the architectural and engineering industries. Using the light pen and drafting software, the operator could draw lines, circles and other pictorial elements, as well as specify distances and angles. Elements could also be linked together into sub-drawings, or macros, which could be copied and reflected about each axis. The combination of light pen and visual display created “the illusion of drawing on the CRT with very straight-edged tools.”[14] Thus the concept of the drawing program, and of direct interaction, was in place by 1962, when Time magazine reported on the ITEK EDM in its March 2nd issue.

This system evidently contained in embryo many of the techniques now familiar to every computer-based illustrator and CAD user. It assisted the draftsman with the more time-consuming operations, and with those requiring the pinpoint accuracy that only a computer could supply. Its functionality was constrained by its assigned task, and there is no evidence that the EDM was used directly in any Computer Art projects. However, it did influence the design of later illustration and CAD software, and it was sold to Lockheed Aircraft, Martin Marietta and the U.S. Air Force.

It is also interesting to note the transition to CAD/CAM (Computer-Aided Manufacture) systems as described by Pierre Bézier, who developed the ubiquitous Bézier curve, a smooth curve running between two end points whose shape is governed by intermediate control points. Bézier worked on Renault’s in-house CAD system during the late 1960s and 1970s; it was intended as an interactive program that the designers themselves could use to produce drawings and then prototype them as models. Such systems connected the freeform world of design with the physical realm; as Bézier put it, they “came from the ability to work, think, and react in the rigid Cartesian world of machine tools and, at the same time, in the more flexible, n-dimensional parametric world.”[15] The computer design package was thus grounded in “real-world” requirements from the start, especially the need for three-dimensional positioning and accurate dimensions.
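The construction behind the curve can be sketched with de Casteljau's algorithm, developed independently by Paul de Casteljau at Citroën and now a standard way of evaluating Bézier curves; it is assumed here for illustration and is not necessarily how Renault's system computed them. Repeatedly interpolating between neighbouring control points at parameter t narrows the set down to a single point on the curve. The control points below are hypothetical.

```python
def de_casteljau(control_points, t):
    """Evaluate a Bézier curve at parameter t in [0, 1] by repeated linear
    interpolation between neighbouring control points."""
    points = [tuple(p) for p in control_points]
    while len(points) > 1:
        points = [tuple((1 - t) * a + t * b for a, b in zip(p, q))
                  for p, q in zip(points, points[1:])]
    return points[0]


# A cubic curve: it starts at the first control point and ends at the last,
# while the two inner points pull it upwards without lying on it.
controls = [(0.0, 0.0), (0.0, 1.0), (1.0, 1.0), (1.0, 0.0)]
samples = [de_casteljau(controls, i / 8) for i in range(9)]
print(de_casteljau(controls, 0.5))  # prints (0.5, 0.75)
```

Moving an inner control point reshapes the curve smoothly while its end points stay fixed, which is what made the formulation convenient for interactive design.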

The market for such design systems in the 1960s was limited to those with the funds to acquire room-filling computers and employ specialised technicians, so the main customers were the military and large university labs. Indeed, the military’s input into early graphics computers was crucial, because it needed machines with graphical displays to co-ordinate its defences and to serve as visual systems in aircraft cockpits. The term “computer graphics” itself was coined by William Fetter of Boeing in connection with his cockpit design work.

In summary, many conventions of computer graphics were laid down early on, and despite more than 30 years of refinement, including the revolution brought about by cheap, powerful desktop computers that has placed this technology within reach of most people, we are still using direct descendants of these initial systems. The experimental nature of these systems should not be forgotten, however. As Herbert Freeman noted, the need for a system that “in some sense would mirror the often barely realized but visually obvious relationships inherent in a two-dimensional picture” called for special algorithms for generating shapes, hiding lines and shading, all of which required powerful computers and stretched the capabilities of contemporary machines.[16]

These demands meant that most computer graphics research was carried out where there was access to the most powerful mainframes available: universities and military establishments. Many of the initial difficulties listed by Freeman were overcome by the sheer inventiveness of engineers and programmers, and nowhere was this more apparent than in the case of Sketchpad.

Ivan Sutherland’s system seemed to spring fully formed into existence, with all the accoutrements we have come to expect from modern graphics packages. Working on the capable TX-2 between 1961 and 1963, Sutherland, then a graduate student at MIT, created a comprehensive basis for all subsequent two-dimensional vector graphics software, one that also had no small influence on three-dimensional projects.

The connection between a physical action and its corresponding on-screen effect is fundamental to the GUI. When Sutherland introduced Sketchpad, the promise was that using the computer would become as transparent as drawing on paper. Freeman sees Sutherland’s system as the first in an evolutionary line that, via the Xerox Star, would lead to the Apple Macintosh and thence to Microsoft Windows, the most widespread form of Human-Computer Interaction: “it was not until Sutherland developed his system for man-machine interactive picture generation that people became aware of the full potential offered by computer graphics.”[17]

[Plate IX: Ivan Sutherland working with the first version of Sketchpad c.1961.]

This was certainly true of the young Andries van Dam, who in 1964 saw a film of Sketchpad that Sutherland had released. It impressed him so much that he switched his studies to the nascent field of computer graphics. In 1968 he witnessed Doug Engelbart’s remarkable demonstration of “window systems, the mouse, outline processing, sophisticated hypertext features and telecollaboration with video and audio communication”.[18] Van Dam in no way overstates the importance of the GUIs that grew out of Engelbart’s early work when he asserts that the PC would be nowhere near as pervasive or successful without them.

[Picture: Doug Engelbart’s first patent application for the mouse, showing a computer setup almost identical to modern desktops. Also, the first mouse prototype, 1968.]

Engelbart's original mouse patent

During the 1970s, the windowing system prototyped by Engelbart was developed further at Xerox’s Palo Alto Research Center (PARC). The Graphical User Interface was adopted by a number of operating systems in the early to mid 1980s, most famously that of the Apple Macintosh, and thereafter the computer’s use in graphical fields grew rapidly.

After SEAC established the concept of the pixel, there were a number of attempts to create paint programs that addressed pixels directly. In 1969-70 Joan Miller at Bell Labs implemented a crude paint program that let users “paint” into a frame buffer; then in 1972-73 Dick Shoup wrote SuperPaint at Xerox PARC, the first complete paint system combining hardware and software. It contained all the essential elements of later paint packages, allowing a painter to colour and modify pixels using a palette of tools and effects.[19] In a sense, this harked back to the Whirlwind developers writing “MIT” with their light gun, except that the greater power of 1970s workstations allowed for much more complex graphics.
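The core of such a paint system can be sketched in a few lines. The Python below is hypothetical and is not modelled on SuperPaint's actual code: it assumes a frame buffer in which each pixel stores an index into a colour palette, one common arrangement in early frame-buffer systems, and paints by stamping a circular brush at each sampled stylus position. The class and method names are illustrative.

```python
class FrameBuffer:
    """A hypothetical indexed frame buffer: each pixel holds a palette index."""

    def __init__(self, width, height, palette):
        self.width, self.height = width, height
        self.palette = list(palette)  # index -> (r, g, b)
        self.pixels = [[0] * width for _ in range(height)]

    def stamp(self, cx, cy, radius, colour_index):
        """Paint a filled circular brush centred on (cx, cy), clipped to the buffer."""
        for y in range(max(0, cy - radius), min(self.height, cy + radius + 1)):
            for x in range(max(0, cx - radius), min(self.width, cx + radius + 1)):
                if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2:
                    self.pixels[y][x] = colour_index

    def stroke(self, points, radius, colour_index):
        """Stamp the brush at each sampled stylus position along a stroke."""
        for x, y in points:
            self.stamp(x, y, radius, colour_index)

    def rgb(self, x, y):
        """Look up the displayed colour of a pixel through the palette."""
        return self.palette[self.pixels[y][x]]


fb = FrameBuffer(16, 8, palette=[(0, 0, 0), (255, 255, 255), (255, 0, 0)])
fb.stroke([(2, 3), (4, 3), (6, 4), (8, 4)], radius=1, colour_index=2)
for row in fb.pixels:
    print("".join(".#*"[i] for i in row))
```

Because pixels hold palette indices rather than colours, changing a single palette entry recolours every pixel that uses it at once, without touching the frame buffer itself.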

In 1979, Ed Emshwiller at the New York Institute of Technology created his ground-breaking computer animation Sunstone on a 24-bit frame buffer, using the Paint package created by Mark Levoy at Cornell.[20]

Sunstone marked the point at which computer animation and paint systems began to come of age. Around this time they began to supplant the Scanimate and other analogue graphics computers. With the development of Lucasfilm’s paint package into the first version of Photoshop, this form of graphics software was combined with photo-manipulation and became widespread on desktop computers.

By the end of the 1960s, then, the major components of computer graphics were in place: despite the limitations of the hardware, they were conceptually established, and later graphics systems refined their use and implementation.

[1] “Retrospectives I: The Early Years in Computer Graphics”, SIGGRAPH ’89 Panel Proceedings.

[2] “Retrospectives”, ibid., p. 38.

[3] “Retrospectives II”, ibid., p. 47.

[4] “Retrospectives II”, SIGGRAPH ’89 Panel Proceedings.

[5] “Retrospectives II”, SIGGRAPH ’89 Panel Proceedings.

[6] Russell A. Kirsch, “SEAC and the Start of Image Processing at the National Bureau of Standards”, IEEE Annals of the History of Computing, Vol. 20, No. 2, 1998, p. 10.

See also http://www.sciencecodex.com/fiftieth_anniversary_of_first_digital_image

[7] Russell A. Kirsch, ibid.

[8] William Linn, “Lecture on Low Bit Games”, Linz Harbour, 4 May 1998, www.timesup.org/Obsolete/lectureBolt.html

[9] “Retrospectives II”, ibid., p. 72.

[10] “Retrospectives II”, ibid., p. 59.

[11] Timothy Binkley, “The Wizard of Virtual Pictures and Ethereal Places”, Leonardo: Computer Art in Context, supplemental issue, 1990.

[12] IEEE Annals of the History of Computing, Vol. 20, No. 2, 1998, p. 21, quoting Coons from an MIT article.

[13] SIGGRAPH ’89 Panel Proceedings, ibid.

[14] “Retrospectives II”, ibid., p. 51.

[15] IEEE Annals of the History of Computing, Vol. 20, No. 2, 1998.

[16] Herbert Freeman, “Interactive Computer Graphics”, IEEE Computer Society Press, 1980. Quoted by Wayne Carlson.

[17] Herbert Freeman, ibid.

[18] Andries van Dam, “The Shape of Things to Come”, ACM SIGGRAPH Retrospective, Vol. 32, No. 1, February 1998.

[19] Alvy Ray Smith, “Digital Paint Systems: An Anecdotal and Historical Overview”, IEEE Annals of the History of Computing, 2001, p. 6.

[20] Alvy Ray Smith, ibid., p. 23.